Last November (25th-29th) I took part in the Choreographic Coding Lab, part of the MotionBank Project: an exploratory laboratory focused on translating aspects of dance and choreography into digital forms.

Below are the two projects/prototypes I worked on during that week.

1// LINES

I started by digging into the motion data from the performance by Jonathan Burrows and Matteo Fargion (more info about the performance here). The performance was recorded on video, and the skeleton tracking data was also stored in a database.

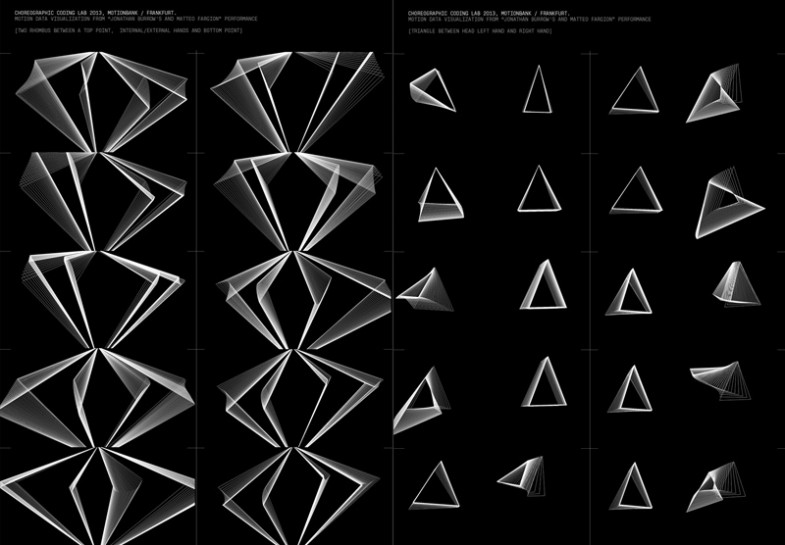

The performance is a gestural dialogue between the two performers. Inspired by “William Forsythe: Improvisation Technologies”, I decided to draw lines joining different body joints to extract graphical patterns from their gestures over time.

Using the data parser that Florian Jenett wrote in Processing as a starting point, I ran a series of experiments connecting different joints with lines (hand to hand, head to both hands, …) and watching how those lines moved over time.
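The joint-to-joint idea can be illustrated with a minimal plain-Java sketch. It is not Florian Jenett's parser or the original Processing code: it assumes each frame of tracking data has already been loaded into a map from joint name to a 2D position, and the names `JointLines`, `Point`, `JointPair`, `Segment` and `traceLines` are all hypothetical. For every frame it emits one segment per chosen joint pair, so drawing all the segments together leaves a trail of the gesture.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class JointLines {

    // Hypothetical 2D joint position (the real tracking data may be 3D).
    record Point(double x, double y) {}

    // Hypothetical pair of joint names to connect with a line.
    record JointPair(String from, String to) {}

    // One line drawn between two joints in a single frame.
    record Segment(Point a, Point b, int frame) {}

    // Emit one segment per joint pair per frame; drawing them all at once
    // leaves a trail that traces the gesture over time.
    static List<Segment> traceLines(List<Map<String, Point>> frames, List<JointPair> pairs) {
        List<Segment> trail = new ArrayList<>();
        for (int f = 0; f < frames.size(); f++) {
            Map<String, Point> joints = frames.get(f);
            for (JointPair pair : pairs) {
                Point a = joints.get(pair.from());
                Point b = joints.get(pair.to());
                if (a != null && b != null) { // skip frames where a joint was not tracked
                    trail.add(new Segment(a, b, f));
                }
            }
        }
        return trail;
    }

    public static void main(String[] args) {
        // Two made-up frames, only to show the data flow.
        List<Map<String, Point>> frames = List.of(
            Map.of("head", new Point(0, 10), "leftHand", new Point(-5, 5), "rightHand", new Point(5, 5)),
            Map.of("head", new Point(0, 10), "leftHand", new Point(-4, 7), "rightHand", new Point(6, 4)));
        List<JointPair> pairs = List.of(
            new JointPair("leftHand", "rightHand"), // hand with hand
            new JointPair("head", "leftHand"),      // head to both hands
            new JointPair("head", "rightHand"));
        for (Segment s : traceLines(frames, pairs)) {
            System.out.println(s);
        }
    }
}
```

In a Processing sketch, each `Segment` would become a `line()` call, with older frames typically drawn more faintly so the sequence reads as motion.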

In the end I got some interesting visual patterns: a geometric, graphical dialogue, a visual abstraction of the original performance.

Below are two posters showing different geometric sequences. See more here.

/ Below is a video render with several different visualizations:

The Processing files I used can be downloaded from my GitHub account here.

*NOTE: They are based on Florian Jenett's initial Processing files and use a library (de.bezier.guido.*) that has not been updated for Processing 2.0, so run them in Processing 1.5.

2// GRAVITATIONAL FIELD

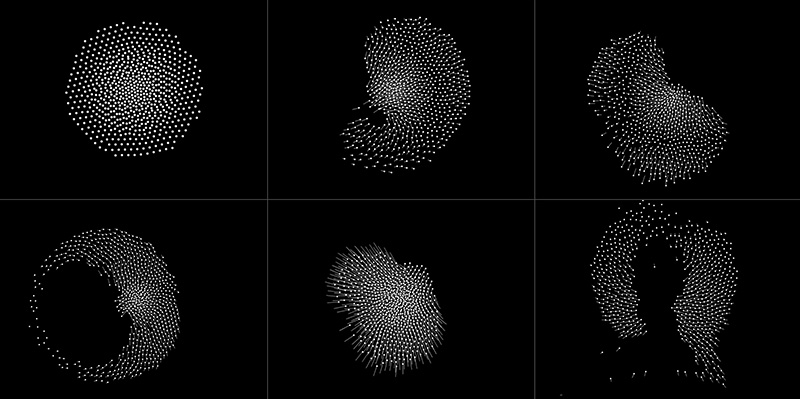

Some participants of the lab built an interactive dance space that continuously broadcast motion data (live and recorded). I wanted to use that live data, so I started working on an interactive visualisation.

I wanted to build not just a visualisation of the movement but an interactive system that receives information from the dancer and gives something back for them to interact and play with, and in this way influences their movements. Something on the borderline between a visual tool and a gaming experience.

So I used the toxi.physics library to develop a gravitational particle system with a central force in the middle of the screen that attracts or repels the particles, plus three additional forces to interact with: one for the mouse (for debugging purposes) and two more, F1 and F2, one for each hand.
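To show the idea behind the force setup, here is a minimal plain-Java stand-in. It does not use the toxiclibs API, and the linear falloff, drag and Euler integration are assumptions chosen for brevity rather than what the original sketch does. Each hypothetical `Force` has a signed strength (positive attracts, negative repels) and a radius. The central force, the debug mouse force and the two hand forces F1 and F2 are simply four entries in the same list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

public class GravityField {

    static final class Vec {
        double x, y;
        Vec(double x, double y) { this.x = x; this.y = y; }
    }

    // A point force: positive strength attracts, negative strength repels.
    static final class Force {
        final Vec pos;
        double strength;     // mutable so a GUI slider could change it
        final double radius; // particles farther away than this are unaffected
        Force(double x, double y, double strength, double radius) {
            this.pos = new Vec(x, y);
            this.strength = strength;
            this.radius = radius;
        }
    }

    static final class Particle {
        final Vec pos, vel = new Vec(0, 0);
        Particle(double x, double y) { pos = new Vec(x, y); }
    }

    // One explicit Euler step: sum every force acting on a particle, then move it.
    static void step(List<Particle> particles, List<Force> forces, double dt, double drag) {
        for (Particle p : particles) {
            double ax = 0, ay = 0;
            for (Force f : forces) {
                double dx = f.pos.x - p.pos.x, dy = f.pos.y - p.pos.y;
                double dist = Math.hypot(dx, dy);
                if (dist < 1e-6 || dist > f.radius) continue;
                // Linear falloff: full strength at the force, zero at its radius.
                double falloff = 1 - dist / f.radius;
                ax += f.strength * falloff * dx / dist;
                ay += f.strength * falloff * dy / dist;
            }
            p.vel.x = (p.vel.x + ax * dt) * drag;
            p.vel.y = (p.vel.y + ay * dt) * drag;
            p.pos.x += p.vel.x * dt;
            p.pos.y += p.vel.y * dt;
        }
    }

    public static void main(String[] args) {
        double w = 800, h = 600;
        Force center = new Force(w / 2, h / 2, 50, 1000); // central force; flip the sign to repel
        Force mouse = new Force(100, 100, 0, 150);        // debug force, off while strength is 0
        Force f1 = new Force(200, 300, -80, 120);         // F1: one hand (repelling here)
        Force f2 = new Force(600, 300, 80, 120);          // F2: other hand (attracting here)
        List<Force> forces = List.of(center, mouse, f1, f2);

        List<Particle> particles = new ArrayList<>();
        Random rnd = new Random(1);
        for (int i = 0; i < 500; i++) {
            particles.add(new Particle(rnd.nextDouble() * w, rnd.nextDouble() * h));
        }
        for (int frame = 0; frame < 60; frame++) {
            // Interactively, f1.pos and f2.pos would be updated from incoming hand positions here.
            step(particles, forces, 1.0 / 60, 0.98);
        }
        Particle first = particles.get(0);
        System.out.printf("first particle at (%.1f, %.1f)%n", first.pos.x, first.pos.y);
    }
}
```

Keeping every force in one list means a new interaction point, such as another body joint, is just one more `Force` entry.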

/ Below are some stills from the gravitational system. See more pictures in my Flickr gallery here.

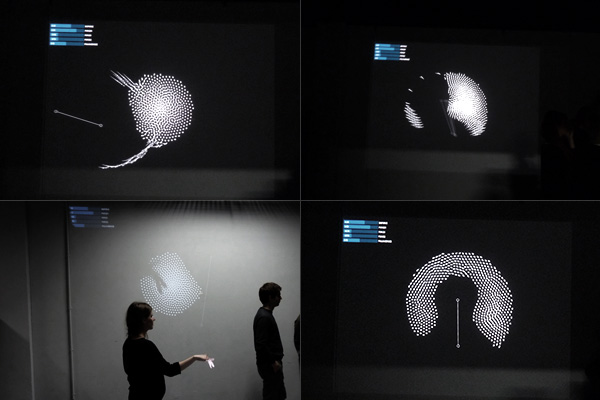

During development I used the motion data that was being broadcast.

Unfortunately I didn't have time to test it properly with a performer interacting directly with the visualisation, but I intend to do so in the near future.

/ Below are some pictures from the lab with the application receiving live motion data:

/ Below is a screen capture of the system receiving live motion data:

You can download the Processing files here. The sketch has a GUI where you can control the strength of each force (attract/repel). I created two forces to interact with, which I imagined would be controlled by the performer's hands (the position data comes in via OSC messages).

For more information and updates about the Choreographic Coding Lab, keep track of the NODE FB page and the MotionBank site, FB, and Twitter, where more documentation and project reports from other participants will be posted.